High Availability NFS with DRBD and HeartBeat

DRBD lets you mirror two block devices located on two different hosts across an IP network on Linux. It replicates data written to the primary device onto the secondary device so that both copies stay identical. DRBD works on top of block devices, such as hard disk partitions or LVM logical volumes, and mirrors each data block written to disk to the peer node.

DRBD can use almost any block device as its backing storage, for example:

a partition or a complete hard disk

software RAID

Logical Volume Manager (LVM)

Enterprise Volume Management System (EVMS)

Heartbeat is a daemon that provides cluster infrastructure (communication and membership) services to its clients. This allows clients to know about the presence (or disappearance!) of peer processes on other machines and to easily exchange messages with them.

Use the following tutorial to configure highly available NFS storage with DRBD and Heartbeat on CentOS 6 servers.

Requirements for Redundant NFS with DRBD and Heartbeat

– Install NFS

Install the NFS server if it is not already installed.

#yum install nfs-utils nfs-utils-lib

– Two drives of the same size (preferably)

– Networking between the storage1 and storage2 servers.

– Working DNS resolution

For example, in /etc/hosts on both servers:

10.1.1.2 storage1.clouddb.net

10.1.1.3 storage2.clouddb.net

– Synchronized time (NTP) on both servers.

Install NTP if it is not already installed.

#yum install ntp

– SELinux in permissive mode.

– Port 7788 (used by DRBD) open in iptables on both servers.
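Two of these requirements can be scripted. The sketch below assumes a POSIX shell; add_host_entry and drbd_fw_rules are hypothetical helpers, not part of DRBD or Heartbeat. add_host_entry appends a hosts entry only if the name is not already listed (the hosts file is a parameter, so you can try it on a copy first). drbd_fw_rules only prints iptables commands for DRBD (7788/tcp) and for Heartbeat (694/udp, configured later in ha.cf), so you can review them before running them as root; -I puts the rules ahead of the stock CentOS 6 REJECT rule.

```shell
# add_host_entry IP FQDN [HOSTS_FILE]
# Hypothetical helper: appends "IP FQDN SHORTNAME" unless FQDN is already listed.
add_host_entry() {
    ip=$1
    fqdn=$2
    file=${3:-/etc/hosts}
    short=${fqdn%%.*}    # storage1.clouddb.net -> storage1
    # -F: treat the dots literally, -w: match the whole name only;
    # a missing file is not an error, it is simply created below
    if grep -qwF "$fqdn" "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s %s\n' "$ip" "$fqdn" "$short" >> "$file"
}

# drbd_fw_rules PEER_IP
# Hypothetical helper: prints (does not run) iptables commands that let
# PEER_IP reach DRBD on 7788/tcp and Heartbeat on 694/udp.
drbd_fw_rules() {
    peer=$1
    echo "iptables -I INPUT -p tcp -s $peer --dport 7788 -j ACCEPT"
    echo "iptables -I INPUT -p udp -s $peer --dport 694 -j ACCEPT"
    echo "service iptables save"
}

# Usage, as root:
#   add_host_entry 10.1.1.2 storage1.clouddb.net
#   add_host_entry 10.1.1.3 storage2.clouddb.net
#   drbd_fw_rules 10.1.1.3 | sh    # on storage1
#   drbd_fw_rules 10.1.1.2 | sh    # on storage2
```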

Heartbeat will manage a virtual IP (VIP) address. Prepare the following server details and partition before you proceed.

storage1.clouddb.net

IP : 10.1.1.2

storage2.clouddb.net

IP : 10.1.1.3

Virtual (VIP) ipaddress : 10.1.1.4

Same partition on both servers:

/dev/sdb1

Add the /data folder to /etc/exports on both servers, since either one may end up serving the share. For example:

/data 10.1.1.0/24(rw,no_root_squash,no_all_squash,sync)
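The client specification in /etc/exports is a network, which is conventionally written with its network address (10.1.1.0/24 for the hosts in this tutorial). A small sketch for working that address out from any host IP and prefix length, assuming a shell with 64-bit arithmetic; network_of is a hypothetical helper.

```shell
# network_of IP PREFIXLEN
# Hypothetical helper: prints the network address, e.g. 10.1.1.1 24 -> 10.1.1.0
network_of() {
    ip=$1
    bits=$2
    # split the dotted quad into four octets
    a=${ip%%.*}; rest=${ip#*.}
    b=${rest%%.*}; rest=${rest#*.}
    c=${rest%%.*}; d=${rest#*.}
    n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
    # netmask with the top BITS bits set, kept within 32 bits
    mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    n=$(( n & mask ))
    echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}
```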

How to install DRBD and Heartbeat for the NFS share.

Install the ELRepo repository for yum on both servers.

storage1/storage2

#rpm -Uvh http://www.elrepo.org/elrepo-release-6-6.el6.elrepo.noarch.rpm

Update the installed packages on both servers.

storage1/storage2

#yum update -y

Install & Configure DRBD

Now install the DRBD kernel module and its utilities with yum on both storage1 and storage2.

storage1/storage2

# yum -y install drbd83-utils kmod-drbd83

Load the drbd kernel module manually on both machines (or reboot):

storage1/storage2

#modprobe drbd

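Next, create a new DRBD resource file, /etc/drbd.d/loadbalance.res. Use the correct IP addresses and backing devices for your servers, and make sure each name after the on keyword matches the output of uname -n on that node (here storage1.clouddb.net and storage2.clouddb.net). You can give the resource any name you like.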

#vi /etc/drbd.d/loadbalance.res

resource loadbalance {
    startup {
        wfc-timeout 30;
        outdated-wfc-timeout 20;
        degr-wfc-timeout 30;
    }
    net {
        cram-hmac-alg sha1;
        shared-secret sync_disk;
    }
    syncer {
        rate 10M;
        al-extents 257;
        on-no-data-accessible io-error;
    }
    on storage1.clouddb.net {
        device /dev/drbd0;
        disk /dev/sdb1;
        address 10.1.1.2:7788;
        meta-disk internal;
    }
    on storage2.clouddb.net {
        device /dev/drbd0;
        disk /dev/sdb1;
        address 10.1.1.3:7788;
        meta-disk internal;
    }
}
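A mismatch between the on section names and the real host name is an easy mistake to make. A minimal check, assuming awk and grep are available; drbd_res_has_host is a hypothetical helper that lists the names following each on keyword and looks for an exact match.

```shell
# drbd_res_has_host RES_FILE HOSTNAME
# Hypothetical helper: succeeds if RES_FILE has an "on HOSTNAME {" section.
drbd_res_has_host() {
    # print the word after each "on" keyword (dropping a glued-on "{"),
    # then require an exact, literal, whole-line match
    awk '$1 == "on" { h = $2; sub(/[{].*/, "", h); print h }' "$1" | grep -qxF "$2"
}

# Usage on each node:
#   drbd_res_has_host /etc/drbd.d/loadbalance.res "$(uname -n)" || echo "hostname mismatch"
```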

Copy /etc/drbd.d/loadbalance.res to the storage2 server:

scp /etc/drbd.d/loadbalance.res storage2.clouddb.net:/etc/drbd.d/loadbalance.res

Initialize the DRBD meta data storage on both machines:

storage1/storage2

# drbdadm create-md loadbalance

If you get errors, see the Errors section below.

Start DRBD on both servers:

storage1/storage2

# service drbd start

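On the primary (storage1) node, run:

#drbdadm primary loadbalance

On a freshly created resource both copies are Inconsistent, so DRBD may refuse this command; in that case force the initial sync as described in the Errors section below.

On the secondary (storage2) node, run:

#drbdadm secondary loadbalance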

You can follow the initial synchronization progress in /proc/drbd. For example:

[root@storage1 ~]# cat /proc/drbd

version: 8.4.4 (api:1/proto:86-101)

GIT-hash: 599f286440bd633d15d5ff985204aff4bccffadd build by phil@Build64R6, 2013-10-14 15:33:06

0: cs:SyncSource ro:Primary/Secondary ds:UpToDate/Inconsistent C r---n-

ns:788174376 nr:72 dw:11979700 dr:776199088 al:3180 bm:47383 lo:0 pe:3 ua:2 ap:0 ep:1 wo:f oos:22787584

[==================>.] sync'ed: 97.2% (22252/780380)M

finish: 0:46:12 speed: 8,204 (8,192) K/sec
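To read these fields from a script, for example to wait until the initial sync finishes, a sketch like the following may help. drbd_state is a hypothetical helper; it assumes the /proc/drbd layout shown above, where each minor's status line starts with a number and a colon followed by cs:, ro: and ds: fields, and it reports only the first minor listed.

```shell
# drbd_state FIELD [STATUS_FILE]
# Hypothetical helper: prints the value of FIELD (cs, ro or ds) for the
# first DRBD minor in STATUS_FILE (default /proc/drbd).
drbd_state() {
    field=$1
    file=${2:-/proc/drbd}
    # status lines look like " 0: cs:SyncSource ro:Primary/Secondary ds:..."
    awk -v f="$field:" '
        $1 ~ /^[0-9]+:$/ {
            for (i = 2; i <= NF; i++)
                if (index($i, f) == 1) { print substr($i, length(f) + 1); exit }
        }' "$file"
}

# Example: block until both disks are up to date
#   until [ "$(drbd_state ds)" = UpToDate/UpToDate ]; do sleep 30; done
```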

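When the initial sync has finished, /proc/drbd should report cs:Connected and ds:UpToDate/UpToDate. The DRBD 8.3 sample below shows ds:Diskless on the local side, which means that node's backing disk is not attached; on a healthy node that field reads UpToDate: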

version: 8.3.16 (api:88/proto:86-97)

GIT-hash: a798fa7e274428a357657fb52f0ecf40192c1985 build by phil@Build64R6, 2013-09-27 16:00:43

0: cs:Connected ro:Secondary/Primary ds:Diskless/UpToDate C r-----

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:0

Almost done. On the primary node (storage1) only, create a filesystem on the DRBD device (/dev/drbd0, not the underlying partition) and mount it. Create the /data mount point on both nodes.

# mkfs.ext4 /dev/drbd0

# mkdir /data

# mount /dev/drbd0 /data

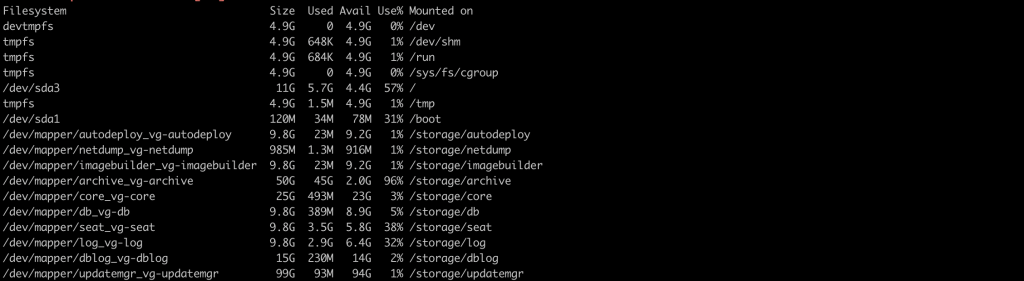

[root@storage1 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/VolGroup-lv_root

29G 5.0G 22G 19% /

tmpfs 245M 0 245M 0% /dev/shm

/dev/sda1 485M 53M 407M 12% /boot

/dev/drbd0 5.0G 139M 4.6G 3% /data

Mount the device on the primary only; do not mount it on storage2 at the same time. Everything written to /data is replicated to storage2. To verify this, unmount /data on storage1, swap the DRBD roles, and mount the device on storage2:

[root@storage1 ~]#umount /data

[root@storage1 ~]#drbdadm secondary loadbalance

[root@storage2 ~]#drbdadm primary loadbalance

[root@storage2 ~]#mkdir -p /data

[root@storage2 ~]#mount /dev/drbd0 /data

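How to install Heartbeat for DRBD.

Before installing Heartbeat, unmount /data and run drbdadm secondary loadbalance on the node where it is mounted; from now on Heartbeat promotes DRBD and mounts the filesystem itself.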

Install heartbeat through yum on both servers.

storage1/storage2

# yum install heartbeat

If yum cannot find the heartbeat package, you will see:

Setting up Install Process

No package heartbeat available.

Enable EPEL Repository in RHEL / CentOS 6 and then install heartbeat through yum.

## RHEL/CentOS 6 32-Bit ##

# wget http://download.fedoraproject.org/pub/epel/6/i386/epel-release-6-8.noarch.rpm

# rpm -ivh epel-release-6-8.noarch.rpm

## RHEL/CentOS 6 64-Bit ##

# wget http://download.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm

# rpm -ivh epel-release-6-8.noarch.rpm

Once Heartbeat is installed, create the following configuration on both servers.

storage1/storage2

vi /etc/ha.d/ha.cf

# Heartbeat logging configuration

logfacility daemon

# Heartbeat cluster members

node storage1.clouddb.net

node storage2.clouddb.net

# Heartbeat communication timing

keepalive 1

warntime 10

deadtime 30

initdead 120

# Heartbeat communication paths

udpport 694

# make sure these IPs are on eth0

ucast eth0 10.1.1.2

ucast eth0 10.1.1.3

baud 19200

# fail back automatically

auto_failback on
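A sketch that sanity-checks the timing values, assuming they are plain seconds as in the file above (Heartbeat also accepts values such as 500ms, which this check does not handle). It applies the guidance in the comments of Heartbeat's sample ha.cf (warntime below deadtime, initdead at least twice deadtime) plus the assumed precondition that keepalive is below warntime. check_ha_timing is a hypothetical helper.

```shell
# check_ha_timing [HA_CF]
# Hypothetical helper: returns non-zero and prints a hint if the timing
# values break keepalive < warntime < deadtime or initdead >= 2 * deadtime.
check_ha_timing() {
    file=${1:-/etc/ha.d/ha.cf}
    awk '
        $1 == "keepalive" { k = $2 }
        $1 == "warntime"  { w = $2 }
        $1 == "deadtime"  { d = $2 }
        $1 == "initdead"  { i = $2 }
        END {
            ok = 1
            if (!(k + 0 < w + 0 && w + 0 < d + 0)) { print "need keepalive < warntime < deadtime"; ok = 0 }
            if (i + 0 < 2 * d) { print "initdead should be at least 2 * deadtime"; ok = 0 }
            exit !ok
        }' "$file"
}
```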

Now add the authentication key file /etc/ha.d/authkeys as follows:

storage1/storage2

vi /etc/ha.d/authkeys

auth 3

3 md5 gunerc01056

Restrict its permissions so that only root can read the key:

# chmod 600 /etc/ha.d/authkeys
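Instead of a fixed password like the one above, you may prefer a random key. A minimal sketch, assuming od and /dev/urandom are available; write_authkeys is a hypothetical helper and uses sha1, one of the methods Heartbeat accepts alongside md5 and crc. Run it on one node and copy the resulting file to the other, since both nodes must use the same key.

```shell
# write_authkeys [FILE]
# Hypothetical helper: writes a Heartbeat authkeys file with a random
# sha1 key and makes it readable by its owner only.
write_authkeys() {
    file=${1:-/etc/ha.d/authkeys}
    # 32 random bytes as a 64-character hex string
    key=$(od -An -N32 -tx1 /dev/urandom | tr -d ' \n')
    # umask covers a newly created file, chmod covers an existing one
    ( umask 077 && printf 'auth 1\n1 sha1 %s\n' "$key" > "$file" )
    chmod 600 "$file"
}
```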

Configure the virtual IP 10.1.1.4 and the cluster resources in /etc/ha.d/haresources. The file must be identical on both servers: its first field names the preferred node (storage1 here), followed by the virtual IP, the DRBD resource, the filesystem mount and the nfs service.

storage1/storage2

vi /etc/ha.d/haresources

storage1.clouddb.net IPaddr::10.1.1.4/24 drbddisk::loadbalance Filesystem::/dev/drbd0::/data::ext4 nfs
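Heartbeat acquires the resources on a haresources line from left to right and releases them from right to left, so on failover nfs is stopped before the filesystem is unmounted and DRBD demoted, and the virtual IP goes last. A small sketch that prints both orders for a line; haresources_order is a hypothetical helper and assumes resource names contain no glob characters.

```shell
# haresources_order LINE
# Hypothetical helper: prints the start and stop order of the resources
# on one haresources line (the first field is the preferred node).
haresources_order() {
    set -- $1    # split the line on whitespace
    shift        # drop the node name, keep the resources
    start=$*
    stop=
    for r in "$@"; do
        stop="$r${stop:+ $stop}"    # prepend, which reverses the list
    done
    echo "start: $start"
    echo "stop:  $stop"
}

# Example:
#   haresources_order "$(grep -v '^#' /etc/ha.d/haresources)"
```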

That's all! The setup is complete. Start the Heartbeat service on both servers:

#/etc/init.d/heartbeat start

You can monitor the log from /var/log/messages.

Check that the active storage server has mounted /data. Other servers can then mount the NFS share through the virtual IP 10.1.1.4.

Note: first check that 10.1.1.4 answers ping.

For example, to mount the share on a client host (create the /storage mount point first):

[root@host1 ~]#mount -t nfs 10.1.1.4:/data /storage
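To make the client mount permanent, an /etc/fstab entry that points at the virtual IP keeps clients independent of which storage node is active. A minimal sketch; add_nfs_fstab is a hypothetical helper, and the defaults,_netdev options are an assumption (_netdev delays the mount until networking is up at boot).

```shell
# add_nfs_fstab SERVER:EXPORT MOUNTPOINT [FSTAB]
# Hypothetical helper: appends an NFS entry unless MOUNTPOINT is already listed.
add_nfs_fstab() {
    src=$1
    mnt=$2
    fstab=${3:-/etc/fstab}
    # field 2 of an fstab line is the mount point
    if awk -v m="$mnt" '$2 == m { found = 1 } END { exit !found }' "$fstab" 2>/dev/null; then
        return 0
    fi
    printf '%s %s nfs defaults,_netdev 0 0\n' "$src" "$mnt" >> "$fstab"
}

# Usage on host1, as root:
#   mkdir -p /storage && add_nfs_fstab 10.1.1.4:/data /storage && mount /storage
```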

Errors

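If DRBD does not start the initial synchronization, run the following command on the primary only to make it the sync source:

drbdadm primary --force loadbalance

On DRBD 8.3 the user's guide gives this as drbdadm -- --overwrite-data-of-peer primary loadbalance.

A status like the following shows that neither node has become the sync source: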

cat /proc/drbd

version: 8.3.11 (api:88/proto:86-96)

srcversion: DA5A13F16DE6553FC7CE9B2

0: cs:Connected ro:Secondary/Secondary ds:Inconsistent/Inconsistent C r-----

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:1953460984

This happens because neither server can tell whether it holds the correct data, so one of them has to be invalidated so that the other is treated as up to date. On the secondary server, run:

Fix:

sudo drbdadm invalidate loadbalance

drbdadm create-md: Operation refused (existing filesystem on the backing device)

[root@storage1 ~]# drbdadm create-md loadbalance

md_offset 960197087232

al_offset 960197054464

bm_offset 960167747584

Found ext3 filesystem

937692472 kB data area apparently used

937663816 kB left usable by current configuration

Device size would be truncated, which

would corrupt data and result in

'access beyond end of device' errors.

You need to either

* use external meta data (recommended)

* shrink that filesystem first

* zero out the device (destroy the filesystem)

Operation refused.

Command 'drbdmeta 0 v08 /dev/sdb1 internal create-md' terminated with exit code 40

drbdadm create-md tld6drbd: exited with code 40

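Fix (this destroys everything on /dev/sdb1, so back up any data first; the other two options in the message avoid this):

dd if=/dev/zero of=/dev/sdb1 bs=1M count=128

Then run drbdadm create-md loadbalance again.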
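Internal meta data is stored at the end of the backing device, which is why an existing filesystem covering the whole partition would be truncated. The DRBD user's guide estimates the internal meta data size as Ms = ceil(Cs / 2^18) * 8 + 72, with the device size Cs and meta data size Ms in 512-byte sectors. A small sketch of that arithmetic; drbd_internal_md_kib is a hypothetical helper. For the partition in the output above (937692472 kB, the same size drbdmeta reports the filesystem using) it gives 28656 kB of meta data and 937663816 kB usable, matching drbdmeta's figure, so shrinking the filesystem (the second option in the message) would mean shrinking it by at least those 28656 kB.

```shell
# drbd_internal_md_kib DEVICE_KIB
# Hypothetical helper: estimates DRBD internal meta data size using
# Ms = ceil(Cs / 2^18) * 8 + 72 (Cs and Ms in 512-byte sectors).
# Prints "<meta data KiB> <usable KiB>".
drbd_internal_md_kib() {
    cs=$(( $1 * 2 ))                              # KiB -> sectors
    ms=$(( (cs + 262143) / 262144 * 8 + 72 ))     # ceil(cs / 2^18) * 8 + 72
    echo "$(( ms / 2 )) $(( $1 - ms / 2 ))"       # sectors -> KiB (ms is even)
}
```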

DRBD & mkfs: Wrong medium type while trying to determine filesystem size

[root@primary05 ~]# mkfs -t ext4 /dev/drbd0

mke2fs 1.41.12 (17-May-2010)

mkfs.ext4: Wrong medium type while trying to determine filesystem size

Cause:

The state of your device is kept in /proc/drbd:

[root@primary05 ~]# cat /proc/drbd

version: 8.3.7 (api:88/proto:86-91)

srcversion: EE47D8BF18AC166BE219757

0: cs:Connected ro:Secondary/Primary ds:UpToDate/UpToDate C r----

ns:0 nr:18104264 dw:18100168 dr:0 al:0 bm:0 lo:1024 pe:0 ua:1025 ap:0 ep:2 wo:b oos:0

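The ro: field shows the role of the local node first and the peer second. Here the local node is Secondary, and DRBD does not allow a Secondary device to be opened, so mkfs fails.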

Fix:

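Switch the local DRBD device to Primary with drbdadm. In single-primary mode the peer, which is Primary here, must first be unmounted and demoted with drbdadm secondary loadbalance: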

# drbdadm primary loadbalance
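The role check above can be scripted as a guard before running mkfs or mount. drbd_local_role is a hypothetical helper that reads the left half of the ro: field (the local role) for the first minor, assuming the /proc/drbd layout shown above.

```shell
# drbd_local_role [STATUS_FILE]
# Hypothetical helper: prints the local role (e.g. Primary or Secondary)
# of the first DRBD minor in STATUS_FILE (default /proc/drbd).
drbd_local_role() {
    awk '$1 ~ /^[0-9]+:$/ {
        for (i = 2; i <= NF; i++)
            if ($i ~ /^ro:/) { split(substr($i, 4), r, "/"); print r[1]; exit }
    }' "${1:-/proc/drbd}"
}

# Guard before formatting or mounting:
#   [ "$(drbd_local_role)" = Primary ] || { echo "not Primary, refusing" >&2; exit 1; }
```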

If the two servers do not synchronize, check /var/log/messages. A node that has dropped the connection shows cs:StandAlone in /proc/drbd:

#cat /proc/drbd

version: 8.4.0 (api:1/proto:86-100)

GIT-hash: 28753f559ab51b549d16bcf487fe625d5919c49c build by gardner@, 2011-12-12 23:52:00

0: cs:StandAlone ro:Secondary/Unknown ds:UpToDate/DUnknown r-----

ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:b oos:76

with errors like this in /var/log/messages:

Jul 9 10:30:17 primary06 kernel: block drbd0: Split-Brain detected but unresolved, dropping connection!

Fix:

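Step 1: Start DRBD manually on both nodes.

Step 2: On the node whose changes you want to discard, make it Secondary, disconnect, and reconnect while discarding its data:

drbdadm secondary all

drbdadm disconnect all

drbdadm -- --discard-my-data connect all

(With DRBD 8.4 the last command is drbdadm connect --discard-my-data all.)

Step 3: On the other node, whose data you keep, make it Primary and reconnect:

drbdadm primary all

drbdadm disconnect all

drbdadm connect all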
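Before choosing which node to discard, it helps to know which DRBD devices actually reported a split brain. A minimal sketch that extracts the device names from kernel log lines in the format shown above; drbd_split_brain_devices is a hypothetical helper.

```shell
# drbd_split_brain_devices [LOG_FILE]
# Hypothetical helper: prints each DRBD device (e.g. drbd0) that logged
# "Split-Brain detected", once per device.
drbd_split_brain_devices() {
    sed -n 's/.*block \(drbd[0-9]*\): Split-Brain detected.*/\1/p' "${1:-/var/log/messages}" | sort -u
}
```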

drbdadm adjust: No valid meta data found

[root@artifactory ~]# drbdadm adjust clusterdb

No valid meta data found

Command 'drbdmeta 1 v08 /dev/xvdb1 0 apply-al' terminated with exit code 255

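Running drbdadm in dry-run mode (-d) prints the commands it would execute without running them. Here it shows the meta data being read from /dev/xvdb1 (index 0), while the backing disk is /dev/xvdf1:

[root@artifactory ~]# drbdadm -d adjust all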

drbdmeta 1 v08 /dev/xvdb1 0 apply-al

drbdsetup attach 1 /dev/xvdf1 /dev/xvdb1 0